Using Terraform & Ansible to deploy a Kubernetes cluster with Kubespray

Note: This tutorial provides guidance on deploying a Kubernetes cluster using Kubespray and Terraform. However, we no longer recommend it for creating Kubernetes clusters and deploying applications on them. Instead, we recommend following our updated tutorial on how to Deploy applications on Kubernetes cluster with Rancher; our Containerspaces solution offers a simpler and more efficient way to achieve the same goal. You can still use this tutorial as a reference for using Terraform with the whitesky.cloud BV portal.

Note: We do not offer support for this tutorial, as it is no longer maintained.

This tutorial is aimed at whitesky.cloud BV users who want to deploy applications on a redundant Kubernetes cluster using Kubespray and Terraform.

Kubernetes is a cluster orchestration system widely used to deploy and manage containerized applications at scale. It provides high availability through container replication and service redundancy: if one of the compute nodes of the cluster fails, Kubernetes' self-healing ensures that the pods deployed on the faulty node are redeployed on one of the healthy nodes.

While containerized applications are typically stateless and the pods running them can easily be redeployed, moved or multiplied, actual data should be stored in a persistent back-end so that it is accessible to pods across the cluster. To add a persistent back-end to the Kubernetes cluster, you can define a storage object called a Persistent Volume (PV). Any pod can use a Persistent Volume Claim (PVC) to obtain access to the PV. whitesky.cloud BV uses portal-whitesky-cloud-csi-driver to dynamically provision PVs on the cluster. The driver is a plugin that spawns a data disk to act as persistent storage for the Kubernetes cluster.

This tutorial shows an example of how to set up a Kubernetes cluster with persistent volumes backed by the portal-whitesky-cloud-csi-driver, using Terraform and Ansible. On top of the Kubernetes cluster we will use Helm to deploy a working WordPress website with TLS enabled. Certificate handling is automated, so you never need to worry about updating your certificate.

Prerequisites

Needed for the Kubernetes cluster

- SSH key pair (Create your own)

- JWT for the API

- Your customer name

- Access to a particular location

- Example Terraform, Ansible and Helm files:

-

Ready to use portal-whitesky-cloud-terraform-provider

- Terraform v0.14.7

- whitesky.cloud BV's terraform provider (see Terraform Plugin User Guide)

Needed for the WordPress website with TLS

- A (sub)domain name pointing to the public IP of the cloudspace (the IP is provided later in the tutorial)

Architecture

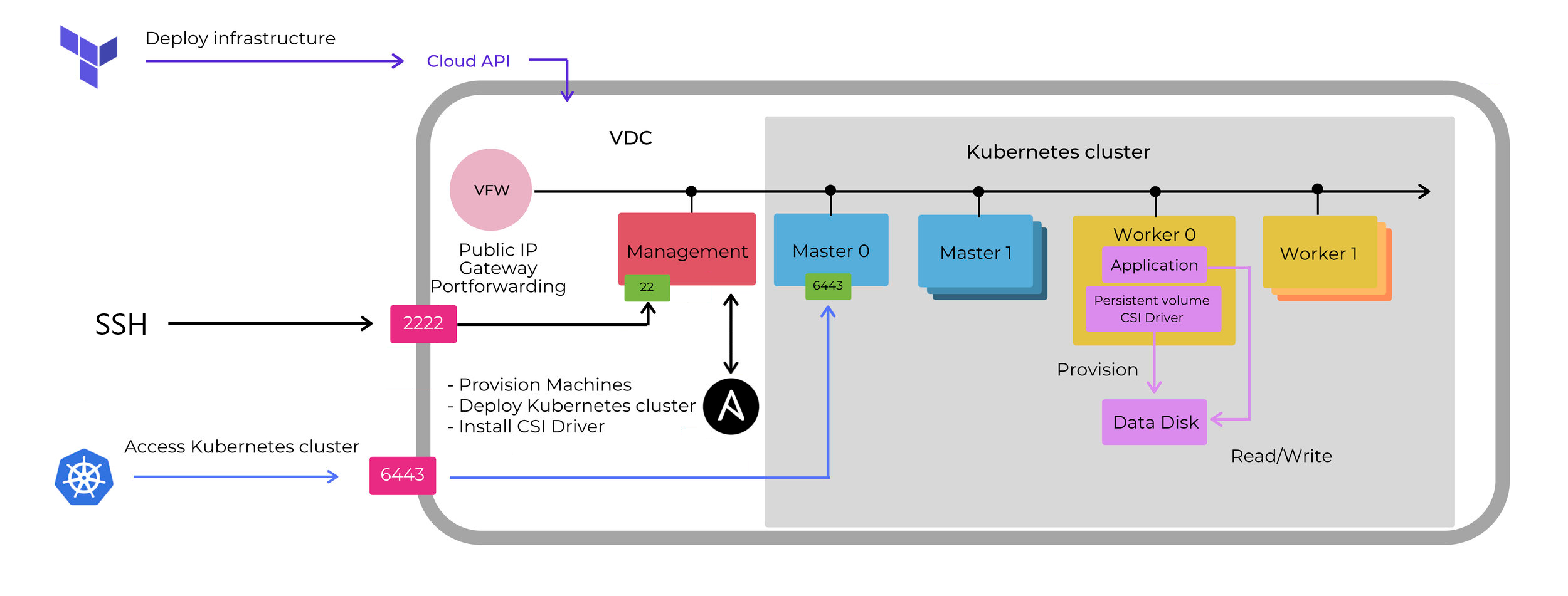

The diagram shows the design of the Kubernetes cluster deployed in a dedicated cloudspace.

- whitesky.cloud BV's API is used by Terraform to deploy a cloudspace with a configurable set of nodes.

- Nodes of the cluster are deployed in a dedicated cloudspace. All nodes in the cloudspace have IP addresses on the internal network managed by the Virtual Firewall (VFW) of the cloudspace. The VFW has a routable IP address and is responsible for providing Internet access and port forwarding for the machines on its private network.

- Port 22 of the management node is forwarded to port 2222 of the VFW so that the management node can be used as an SSH bastion host. We run Ansible from this bastion host; the Ansible playbooks are responsible for installing the Kubernetes cluster itself and the CSI driver on the cluster nodes. (It is also possible to run Ansible on your local host and use the management node only as a bastion host. Here we chose to use the management node to deploy Kubernetes and Helm.)

- Port 6443 (the standard port of the Kubernetes API server) of the master node is forwarded to port 6334 of the VFW to enable access to the Kubernetes cluster.

- The physical storage instance is a data disk attached to one of the workers. The persistent storage on the cluster is managed by the portal-whitesky-cloud-csi-driver. The CSI driver consists of several pods responsible for creating, provisioning and attaching the data disk to the correct host. Persistent storage is created on the cluster by means of a storage class configured to use the portal-whitesky-cloud-csi-driver as a back-end for the PVC. If the worker node hosting the persistent disk is removed from the cluster, the CSI driver pods are redeployed and the persistent disk is reattached to one of the available workers.

- The application runs in a Kubernetes pod and can consist of several containers sharing access to the storage instance. To enable read/write operations on the storage, mount the persistent volume claim (PVC) in your app.
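To illustrate the last point, here is a minimal sketch that writes a PVC manifest and a pod manifest that mounts the claim. Everything named here is an assumption for illustration: the storage class name csi-driver-sc, the claim name app-data, the image and the size are not taken from the tutorial's files. Use the storage class that the CSI driver installation actually creates (kubectl get storageclass lists them).

```shell
# Sketch only: all names below are illustrative assumptions.
STORAGE_CLASS="${STORAGE_CLASS:-csi-driver-sc}"   # hypothetical storage class name

# Write a claim and a pod that mounts it under /data.
cat > pvc-example.yaml <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ${STORAGE_CLASS}
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: nginx:stable
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data
EOF

# Apply it once the cluster and the CSI driver are running:
#   kubectl apply -f pvc-example.yaml
```

With dynamic provisioning, creating the claim is what should trigger the driver to create and attach a data disk; no PV has to be written by hand.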

Deploy nodes with Terraform

Overview of necessary terraform files

- main.tf - the primary entrypoint; for this tutorial main.tf includes all resources.

- variables.tf - collection of the input variables used in the Terraform module.

- terraform.tfvars - values of the input variables can be set here. The first step will be to copy terraform.tfvars.example so we can start adjusting our variables.

Terraform providers

main.tf uses three Terraform providers to build the infrastructure:

# check installed providers

terraform providers

Providers required by configuration:

.

├── provider[registry.terraform.io/hashicorp/null]

├── provider[registry.terraform.io/hashicorp/local]

└── provider[registry.terraform.io/portal-whitesky-cloud/portal-whitesky-cloud] ~> 2.0

provider.portal-whitesky-cloud/portal-whitesky-cloud supports resources:

- portal-whitesky-cloud_cloudspace

- portal-whitesky-cloud_machine

- portal-whitesky-cloud_disk

- portal-whitesky-cloud_port_forward

These are responsible for creating and managing cloudspaces, virtual machines, disks and port forwards, respectively.

SSH access to the cluster. The portal-whitesky-cloud_machine resource is additionally responsible for creating users and uploading SSH keys to the virtual machines. The current configuration provisions the public SSH key provided in terraform.tfvars for the user ansible on the management node and for the users ansible and root on the master and worker nodes. With this configuration you can establish an SSH connection to the management node with ssh -A ansible@<IP_ADDRESS> -p 2222, and from there you can SSH to both the ansible and root users on the masters and workers. Should you need to configure additional users or upload more keys at creation time, provide a suitable user_data attribute to the portal-whitesky-cloud_machine resources of the Terraform configuration (see Terraform Plugin User Guide).

- provider.null supports null_resource blocks used to provision machines on the cluster with the necessary configuration; in this case we configure ansible user access and mount data disks on the Kubernetes nodes. Provisioning of the cluster nodes is only possible via the management node, where the SSH port is open. To use the management node as a jump/bastion host, we specify the IP address and the user of the management node as bastion_host and bastion_user in the connection block of the provisioner. For more details see the Terraform documentation on how to configure a Bastion Host.

- provider.local supports resources on the local host. This is mainly used for templating files.
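The same jump-host idea works for interactive SSH from your workstation. This is a minimal sketch, assuming OpenSSH 7.3 or newer (which provides the -J / ProxyJump option), the ansible user on the management node and the port 2222 forward described earlier. The function name ssh_via_mgt is hypothetical.

```shell
# Open an SSH session to a cluster node, jumping through the management node.
#   $1: public IP of the cloudspace (the VFW forwards port 2222 to the management node)
#   $2: internal IP of the target node
#   $3: user on the target node (defaults to ansible)
ssh_via_mgt() {
    public_ip=$1
    node_ip=$2
    user=${3:-ansible}
    ssh -J "ansible@${public_ip}:2222" "${user}@${node_ip}"
}

# Example (placeholder addresses):
#   ssh_via_mgt PUBLIC_IP MASTER_NODE_IP root
```

With -J, authentication to the target node happens from your workstation through the tunnel, so agent forwarding (-A) is not needed for this variant.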

Preparing the required files

Adjust terraform.tfvars to deploy a Kubernetes-ready set of Linux servers.

- Copy terraform.tfvars.example to terraform.tfvars

- Adjust the customer_id, client_jwt, cloudspace_name, location and external_network_id inside terraform.tfvars

  - external_network_id - You can find the external networks for your chosen location by using the API: /customers/{customer_id}/locations/{location}/external-networks

  - Use the external_network_id of the external network that is connected to the internet.
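The external-networks lookup can be scripted with curl. This is only a sketch: the tutorial does not state the API root, so API_BASE below is a placeholder you must replace, and sending the JWT as a Bearer token is an assumption about the authentication scheme. Both function names are hypothetical.

```shell
# Placeholder API root: replace with the real base URL of the portal API.
API_BASE="${API_BASE:-https://api.example.invalid}"

# Build the external-networks URL for a customer ($1) and location ($2).
external_networks_url() {
    printf '%s/customers/%s/locations/%s/external-networks\n' "$API_BASE" "$1" "$2"
}

# Query the endpoint. Assumes CLIENT_JWT holds your JWT and that the API
# accepts it as a Bearer token (not confirmed by this tutorial).
list_external_networks() {
    curl -fsS -H "Authorization: Bearer ${CLIENT_JWT}" \
        "$(external_networks_url "$1" "$2")"
}

# Example:
#   CLIENT_JWT=... list_external_networks CUSTOMER_ID LOCATION
```

Pick the entry that represents the internet-connected network from the response and copy its ID into terraform.tfvars.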

Deploy your infrastructure

- Initialize your Terraform directory

  terraform init

- Apply your Terraform configuration

  terraform apply

- Wait for all resources to be deployed. The public IP of your cloudspace is printed at the end.

Note: Write down this IP address, as it will be required for further configuration.

Deploy your Kubernetes Cluster

Steps to deploy the Kubernetes cluster

- SSH into your kube-mgt VM

  ssh -A -p 2222 root@PUBLIC_IP

  - Windows only

    - Copy your private key to kube-mgt so that the VM has access to the other VMs

      scp -P 2222 LOCATION_OF_ID_RSA root@PUBLIC_IP:/root/.ssh/id_rsa

    - SSH to kube-mgt and set the correct permissions for your private key

      chmod 400 ~/.ssh/id_rsa

- Go into the ansible directory

  cd ansible

- Clone the kubespray repository and check out tag v2.16.0. You can check out a more recent tag (or master) if you want to; we use this tag because it has been tested multiple times and works.

  git clone https://github.com/kubernetes-sigs/kubespray.git

  cd kubespray/

  git checkout tags/v2.16.0 -b myBranch

- Install the requirements using pip3

  pip3 install -r requirements.txt

- Copy the example group_vars and our inventory file

  cp -rfp inventory/sample inventory/mycluster

  cp ../inventory.ini inventory/mycluster # copy the inventory file generated by Terraform

- Configure variables

  Configure variables in inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

  cluster_name: "<NAME_OF_CLUSTER>"

  supplementary_addresses_in_ssl_keys: [<PUBLIC_IP>]

  Configure variables in inventory/mycluster/group_vars/k8s_cluster/k8s-net-calico.yml

  calico_mtu: 1400

- Execute the playbook

  ansible-playbook -i inventory/mycluster/inventory.ini cluster.yml -v -b

  The installation can take a few minutes. In the terminal you can follow the Kubespray progress and logs.

- Copy your kubeconfig from the master node and adjust the IP

  mkdir /root/.kube

  scp MASTER_NODE_IP:/etc/kubernetes/admin.conf /root/.kube/config

  - Change server: https://127.0.0.1:6443 to https://MASTER_NODE_IP:6443

- Optional: Copy your kubeconfig to your localhost and change the IP to the public IP of the cloudspace
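The file edits in the steps above (the Kubespray variables and the kubeconfig server address) can also be scripted instead of done by hand in an editor. The two helper functions below are a hypothetical sketch built on sed, not part of Kubespray. They only handle simple top-level key: value lines and a single server: line.

```shell
# Set a top-level "key: value" line in a YAML file. An existing line for the
# key (including a commented-out "# key: ..." line) is replaced; otherwise the
# setting is appended. Values must not contain "|", "&" or "\" (sed syntax).
set_yaml_var() {
    file=$1
    key=$2
    value=$3
    if grep -Eq "^(# ?)?${key}:" "$file"; then
        sed -E "s|^(# ?)?${key}:.*|${key}: ${value}|" "$file" > "$file.tmp"
    else
        { cat "$file"; printf '%s: %s\n' "$key" "$value"; } > "$file.tmp"
    fi
    # Write back into the original file so it keeps its permissions.
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Replace host and port in the "server: https://..." line of a kubeconfig.
#   $1: kubeconfig path, $2: new host or IP, $3: port (defaults to 6443)
set_kubeconfig_server() {
    file=$1
    host=$2
    port=${3:-6443}
    sed -E "s|^([[:space:]]*server:[[:space:]]*https://)[^[:space:]]+|\1${host}:${port}|" \
        "$file" > "$file.tmp"
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Examples (placeholders as used in the steps above):
#   set_yaml_var inventory/mycluster/group_vars/k8s_cluster/k8s-net-calico.yml calico_mtu 1400
#   set_kubeconfig_server /root/.kube/config MASTER_NODE_IP
```

For a kubeconfig copied to your local machine, the address would be the public IP of the cloudspace together with the VFW port that forwards to the API server (6334 in the architecture described earlier).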

Check your Kubernetes cluster

Install the kubectl binary

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl get nodes # All nodes should be ready

kubectl get pods -n kube-system # All pods should be running

Steps to deploy the CSI-Driver

- Check that the ansible files are present on the jump host (they should have been copied in step 2 of deploying the Kubernetes cluster)

  cd /root/ansible

  ls -alh

- Adjust the required variables in install-csi-driver.yml:

  vi install-csi-driver.yml

  - customer_id

  - client_jwt

- Run the ansible-playbook

  ansible-playbook install-csi-driver.yml

- Check that all pods are up in the csi-driver namespace (you might need to wait a bit until they are).

  kubectl get pods -n csi-driver -o wide

  Ensure the following are running: an attacher pod, a provisioner pod and, for each Kubernetes worker, a driver-* pod.
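To spot pods that are not up yet without reading the whole table, the pod listing can be filtered. This is a small sketch; the function name pods_not_running is hypothetical, and it assumes the default column order of kubectl get pods (NAME, READY, STATUS, ...).

```shell
# Read "kubectl get pods --no-headers" output on stdin and print every pod
# whose STATUS column is neither Running nor Completed.
pods_not_running() {
    awk '$3 != "Running" && $3 != "Completed" { print $1 " (" $3 ")" }'
}

# Example:
#   kubectl get pods -n csi-driver --no-headers | pods_not_running
```

An empty result means every pod in the namespace reports Running or Completed. Alternatively, kubectl wait --for=condition=Ready pods --all -n csi-driver --timeout=300s blocks until the pods are ready or the timeout expires.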

Deploy your wordpress using Helm

Install helm to deploy applications

Use the following snippet to install helm.

```
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

Install Ingress, Cert-Manager and a Wordpress website using helm

As an example of an application that can be deployed on a Kubernetes cluster and make use of the CSI driver, let's install a WordPress website with TLS enabled.

For this task we will use Helm, the package manager for Kubernetes.

- Install ingress

  helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

  helm repo update

  helm install ingress ingress-nginx/ingress-nginx -f helm/ingress-values.yaml

- Install Cert-Manager to manage certificates.

  helm repo add jetstack https://charts.jetstack.io

  helm repo update

  kubectl create namespace cert-manager

  kubectl apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v0.14.1/cert-manager.crds.yaml

  kubectl apply -f helm/letsencrypt-issuer.yaml

  helm install cert-manager --namespace cert-manager jetstack/cert-manager --version v0.14.1

- Deploy letsencrypt-issuer (Let's Encrypt is a free-to-use CA)

  Adjust the email address in helm/letsencrypt-issuer.yaml

  kubectl apply -f helm/letsencrypt-issuer.yaml

- Deploy your WordPress

  Adjust the variables in helm/wordpress-values.yaml:

  hostname: example.com # This needs to be a valid domain, pointing towards the public IP of your cloudspace.

  ...

  wordpressUsername: user

  wordpressPassword: user1234

  wordpressEmail: user@example.com

  wordpressFirstName: Bob

  wordpressLastName: Ross

  wordpressBlogName: testsite

  Now you can deploy WordPress

  helm repo add bitnami https://charts.bitnami.com/bitnami

  helm repo update

  helm install wordpress bitnami/wordpress -f helm/wordpress-values.yaml
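Because the hostname has to point at the public IP of the cloudspace, it can help to verify the DNS record before installing the chart. This is a minimal sketch; the function name resolves_to is hypothetical, and the example assumes the dig utility is installed on your machine.

```shell
# Succeed if the expected IP ($1) appears as a whole line in the addresses
# read from stdin, for example the output of "dig +short".
resolves_to() {
    grep -Fxq "$1"
}

# Example (placeholders):
#   dig +short example.com A | resolves_to PUBLIC_IP && echo "DNS is ready"
```

If the check fails, wait for the DNS change to propagate or correct the record before requesting a certificate.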

Notes

If the worker hosting the CSI driver is removed from the cluster, Kubernetes will recreate the driver pods on another worker. Note that Kubernetes will not move the pods if the node is merely unreachable, for example because the machine was stopped or deleted or has network issues. This logic protects against split-brain issues that can occur if the lost worker comes back online after the pods were recreated on another node (see Documentation).

To force the persistent storage and the CSI driver pods to move to another worker, delete the unreachable worker from the cluster:

kubectl delete node worker1

You can check which nodes are unreachable (status NotReady) by executing

kubectl get nodes
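To list only the affected nodes, the output can be filtered on the STATUS column. This is a small sketch; the function name not_ready_nodes is hypothetical, and it assumes the default column order of kubectl get nodes (NAME, STATUS, ...).

```shell
# Read "kubectl get nodes --no-headers" output on stdin and print the names
# of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes
```

Review the printed names before running kubectl delete node on any of them, since a node can also be NotReady only briefly, for instance during a reboot.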